One of my favourite courses this semester, and by far the one I spent the most time on, was my software development course, CPEN 221. This course builds on prior programming knowledge and teaches students how to write large-scale, practical code that is testable, maintainable, and functional.

Although this course is presented through the lens of Java, and students do become highly competent in that language, the focus is on concepts shared by all modern programming languages. Examples from other relevant languages are used to deepen understanding of the course material and to make students more comfortable picking up new languages as needed.

This class puts its concepts into practice by having students complete three “mini projects”. Each of these assignments is split into five tasks that build upon each other and increase in difficulty.

Typically, students complete only the first three tasks, because the later tasks are considerably harder. However, I pushed myself to complete all five tasks for each mini project. I found the challenge rewarding and enjoyed researching approaches to these problems outside of a structured class setting.

In order, the mini projects were themed around Image Processing, Graphs, and Wikipedia. Course policy prevents me from posting source code publicly; however, I am allowed to share my private GitHub repositories upon request.

Mini Project 1: Image Processing

The first project introduced writing high-quality specifications for methods and designing test suites that achieve both feature coverage and branch coverage. It focused on representing images as grids of RGB pixels and performing operations on those grids. The first three tasks were relatively simple: converting images to a reduced colour palette, and comparing images pixel by pixel.
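To make the palette-reduction task concrete, here is a simplified illustration. It is not the submitted solution. It assumes pixels are packed as 0xRRGGBB ints and that "reduced palette" means mapping each pixel to the palette colour with the smallest squared RGB distance. The class and method names are hypothetical.

```java
/** Hypothetical sketch: reduce an image to a fixed palette by nearest squared RGB distance. */
final class PaletteReducer {
    /** Squared Euclidean distance between two 0xRRGGBB colours (alpha bits ignored). */
    static long distanceSquared(int a, int b) {
        int dr = ((a >> 16) & 0xFF) - ((b >> 16) & 0xFF);
        int dg = ((a >> 8) & 0xFF) - ((b >> 8) & 0xFF);
        int db = (a & 0xFF) - (b & 0xFF);
        return (long) dr * dr + (long) dg * dg + (long) db * db;
    }

    /** Returns the palette entry closest to rgb; ties keep the earlier palette entry. */
    static int nearest(int rgb, int[] palette) {
        int best = palette[0];
        long bestDist = Long.MAX_VALUE;
        for (int p : palette) {
            long d = distanceSquared(rgb, p);
            if (d < bestDist) {
                bestDist = d;
                best = p;
            }
        }
        return best;
    }

    /** Returns a new image (rows of pixels) where every pixel is replaced by its nearest palette colour. */
    static int[][] reduce(int[][] pixels, int[] palette) {
        int[][] out = new int[pixels.length][];
        for (int y = 0; y < pixels.length; y++) {
            out[y] = new int[pixels[y].length];
            for (int x = 0; x < pixels[y].length; x++) {
                out[y][x] = nearest(pixels[y][x], palette);
            }
        }
        return out;
    }
}
```

Squared distance avoids a square root and preserves ordering, which is all a nearest-colour search needs.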

The fourth task was implementing a green screen algorithm that operates only on the largest connected region of green. This involved several steps. First, I wrote a recursive depth-first search. Then I recognized that deep recursion can overflow the call stack when computing resources are finite. Finally, I translated the algorithm to use an explicit stack data structure instead.
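The explicit-stack translation can be sketched roughly as follows. This is a simplified illustration, not the course solution. It assumes the image has already been classified into a hypothetical boolean "is green" grid, and that regions are 4-connected. An ArrayDeque stands in for the call stack, so region size is limited by heap memory rather than stack depth.

```java
import java.util.ArrayDeque;
import java.util.Deque;

/** Hypothetical sketch: find the largest 4-connected green region with an explicit stack. */
final class LargestGreenRegion {
    /** Returns a mask that is true exactly for the pixels of the largest green region. */
    static boolean[][] largestRegion(boolean[][] isGreen) {
        int h = isGreen.length, w = h == 0 ? 0 : isGreen[0].length;
        int[][] label = new int[h][w];             // 0 = unvisited, otherwise a region id
        int bestLabel = 0, bestSize = 0, nextLabel = 1;
        Deque<int[]> stack = new ArrayDeque<>();   // replaces the recursive call stack
        for (int sy = 0; sy < h; sy++) {
            for (int sx = 0; sx < w; sx++) {
                if (!isGreen[sy][sx] || label[sy][sx] != 0) continue;
                int id = nextLabel++, size = 0;
                label[sy][sx] = id;
                stack.push(new int[] {sy, sx});
                while (!stack.isEmpty()) {
                    int[] p = stack.pop();
                    size++;
                    int[][] neighbours = {{p[0] - 1, p[1]}, {p[0] + 1, p[1]}, {p[0], p[1] - 1}, {p[0], p[1] + 1}};
                    for (int[] n : neighbours) {
                        int y = n[0], x = n[1];
                        if (y >= 0 && y < h && x >= 0 && x < w && isGreen[y][x] && label[y][x] == 0) {
                            label[y][x] = id;      // mark when pushed so no pixel is pushed twice
                            stack.push(n);
                        }
                    }
                }
                if (size > bestSize) {
                    bestSize = size;
                    bestLabel = id;
                }
            }
        }
        boolean[][] mask = new boolean[h][w];
        for (int y = 0; y < h; y++) {
            for (int x = 0; x < w; x++) {
                mask[y][x] = bestLabel != 0 && label[y][x] == bestLabel;
            }
        }
        return mask;
    }
}
```

The green screen step would then replace only the pixels where the mask is true.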

The fifth task was writing code that automatically rotates images containing text so that the text is horizontal. This involved independently researching Fourier-domain representations of images (for which https://plus.maths.org/content/fourier-transforms-images was an invaluable resource), managing the runtime of algorithms, and testing edge-case behaviour.

The algorithm worked by computing the 2D Fourier transform of the image and finding the ray outward from the center of the spectrum with the highest total magnitude. This ray corresponds to the normal of the text lines, from which the angle needed to rotate the text to horizontal can be determined.
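Here is a rough sketch of the ray-finding step, under several assumptions. The image is a greyscale double[][] indexed [y][x]. The transform is a naive DFT, chosen for readability; a practical version would use an FFT. Instead of walking pixels along each ray, each frequency is binned by its angle, which amounts to summing magnitudes along rays to 1° resolution. The names are hypothetical, and this is not the submitted code. With this coordinate convention, perfectly horizontal text lines give a dominant angle of 90°, so the tilt is the deviation from 90°. The sign of the correcting rotation depends on whether y points down.

```java
/** Hypothetical sketch: estimate text orientation from the 2D DFT magnitude spectrum. */
final class TextSkew {
    /** Magnitude of the 2D DFT coefficient at integer frequency (ky, kx); naive O(H*W) per call. */
    static double dftMagnitude(double[][] img, int ky, int kx) {
        int h = img.length, w = img[0].length;
        double re = 0, im = 0;
        for (int y = 0; y < h; y++) {
            for (int x = 0; x < w; x++) {
                double phase = -2 * Math.PI * ((double) ky * y / h + (double) kx * x / w);
                re += img[y][x] * Math.cos(phase);
                im += img[y][x] * Math.sin(phase);
            }
        }
        return Math.hypot(re, im);
    }

    /**
     * Angle in whole degrees, in [0, 180), of the ray from the spectrum centre with the most energy.
     * Horizontal text lines (brightness varying only with y) give 90.
     */
    static int dominantAngleDegrees(double[][] img) {
        int h = img.length, w = img[0].length;
        double[] energy = new double[180];
        for (int ky = 0; ky < h; ky++) {
            for (int kx = 0; kx < w; kx++) {
                // Map array indices to signed frequencies, i.e. coordinates relative to the centre.
                int dy = ky <= h / 2 ? ky : ky - h;
                int dx = kx <= w / 2 ? kx : kx - w;
                if (dx == 0 && dy == 0) continue;  // skip the DC term (average brightness)
                double deg = Math.toDegrees(Math.atan2(dy, dx));
                if (deg < 0) deg += 180;           // a ray and its opposite carry the same information
                int bin = (int) Math.round(deg) % 180;
                energy[bin] += dftMagnitude(img, ky, kx);
            }
        }
        int best = 0;
        for (int a = 1; a < 180; a++) {
            if (energy[a] > energy[best]) best = a;
        }
        return best;
    }
}
```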

Mini Project 2: Graphs

For this project, we focused on inheritance, writing reusable code, and algorithms.

The first portion of the assignment involved writing two implementations of a Graph interface: a mutable version based on the adjacency list representation, and an immutable version based on the adjacency matrix representation.
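The two representations could look roughly like this. It is a minimal sketch, not the assignment's actual interface. The method names, integer vertex ids, and the undirected, unweighted model are all assumptions. Immutability in the matrix version comes from filling the matrix once in the constructor and never exposing it.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

/** Hypothetical minimal graph interface: undirected, unweighted, vertices are ints 0..n-1. */
interface Graph {
    int vertexCount();
    boolean hasEdge(int u, int v);
    List<Integer> neighbours(int v);
}

/** Mutable adjacency-list graph: vertices and edges can be added after construction. */
final class AdjacencyListGraph implements Graph {
    private final List<List<Integer>> adj = new ArrayList<>();

    int addVertex() {
        adj.add(new ArrayList<>());
        return adj.size() - 1;
    }

    void addEdge(int u, int v) {
        if (!hasEdge(u, v)) {
            adj.get(u).add(v);
            if (u != v) adj.get(v).add(u);
        }
    }

    public int vertexCount() { return adj.size(); }
    public boolean hasEdge(int u, int v) { return adj.get(u).contains(v); }          // O(degree)
    public List<Integer> neighbours(int v) { return Collections.unmodifiableList(adj.get(v)); }
}

/** Immutable adjacency-matrix graph: the matrix is filled once and never exposed. */
final class AdjacencyMatrixGraph implements Graph {
    private final boolean[][] matrix;

    AdjacencyMatrixGraph(int n, int[][] edges) {
        matrix = new boolean[n][n];
        for (int[] e : edges) {
            matrix[e[0]][e[1]] = true;
            matrix[e[1]][e[0]] = true;
        }
    }

    public int vertexCount() { return matrix.length; }
    public boolean hasEdge(int u, int v) { return matrix[u][v]; }                    // O(1)
    public List<Integer> neighbours(int v) {                                         // O(n)
        List<Integer> out = new ArrayList<>();
        for (int u = 0; u < matrix.length; u++) {
            if (matrix[v][u]) out.add(u);
        }
        return Collections.unmodifiableList(out);
    }
}
```

Algorithms written against Graph then work with either representation. The choice becomes a trade-off between O(1) edge lookups (matrix) and memory proportional to the number of edges (list).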

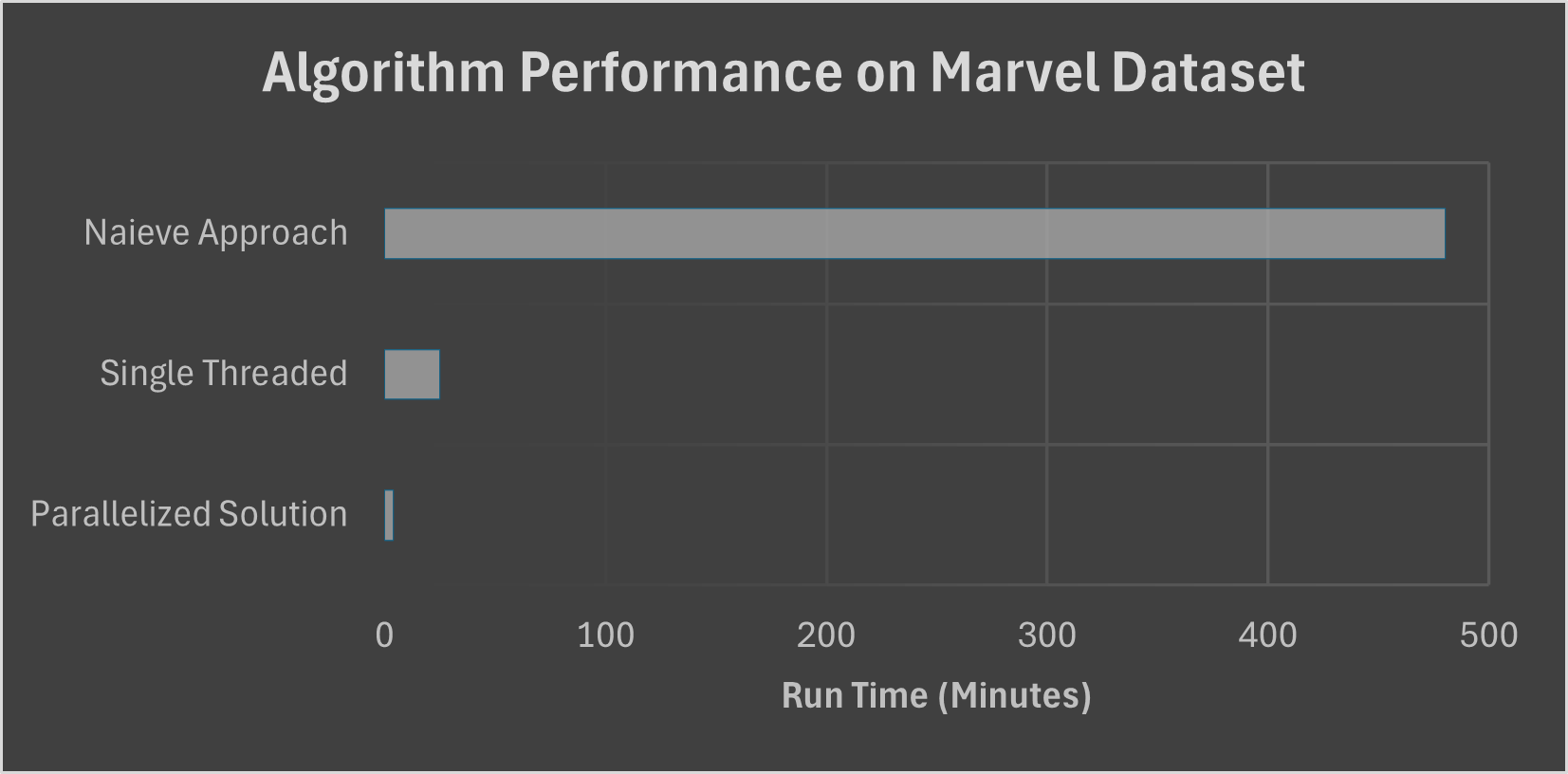

Following that, we wrote algorithms to find shortest paths across graphs, the diameter of a graph, and its center. For these algorithms, I went above and beyond. I optimized them for time complexity and designed them to parallelize easily using Java's parallel streams (Collection.parallelStream()). This allowed my implementation to find the center of a graph with over 150,000 edges in only 4 minutes, compared to the 8 hours typical of other submissions.
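One way to see why these problems parallelize well: each vertex's eccentricity (its greatest shortest-path distance to any other vertex) comes from an independent search. The diameter is the largest eccentricity and the center is the vertex with the smallest. The sketch below assumes a connected, unweighted graph stored as adjacency lists and uses one BFS per vertex, so it is illustrative rather than the optimized submission. It runs the searches with IntStream.parallel(), which is safe here because every search only reads the shared adjacency lists.

```java
import java.util.ArrayDeque;
import java.util.Arrays;
import java.util.List;
import java.util.stream.IntStream;

/** Hypothetical sketch: diameter and center of a connected, unweighted graph via parallel BFS. */
final class GraphCenter {
    /** BFS from source; returns the largest hop distance to any reachable vertex. */
    static int eccentricity(List<List<Integer>> adj, int source) {
        int[] dist = new int[adj.size()];
        Arrays.fill(dist, -1);
        ArrayDeque<Integer> queue = new ArrayDeque<>();
        dist[source] = 0;
        queue.add(source);
        int max = 0;
        while (!queue.isEmpty()) {
            int u = queue.poll();
            max = Math.max(max, dist[u]);
            for (int v : adj.get(u)) {
                if (dist[v] == -1) {
                    dist[v] = dist[u] + 1;
                    queue.add(v);
                }
            }
        }
        return max;
    }

    /** Eccentricity of every vertex; searches run in parallel and results keep vertex order. */
    static int[] eccentricities(List<List<Integer>> adj) {
        return IntStream.range(0, adj.size()).parallel().map(v -> eccentricity(adj, v)).toArray();
    }

    static int diameter(int[] ecc) {
        return Arrays.stream(ecc).max().orElse(0);
    }

    /** Vertex with minimum eccentricity (lowest id on ties). */
    static int center(int[] ecc) {
        int best = 0;
        for (int v = 1; v < ecc.length; v++) {
            if (ecc[v] < ecc[best]) best = v;
        }
        return best;
    }
}
```

For weighted graphs, the BFS would be swapped for Dijkstra's algorithm, but the per-vertex independence, and therefore the parallelism, stays the same.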

Mini Project 3: Wikipedia Server

This project focused on writing a server which could act as an intermediary between a client and Wikipedia, tracking statistics, providing additional search functionality, and caching pages. This project introduced the concepts of parallelism, concurrency, and client server architecture.

We wrote thread-safe buffers for caching pages. This meant reasoning about the thread safety of data structures, and about the trade-off between fine- and coarse-grained locking in terms of code complexity and performance.
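As an illustration of the coarse-grained end of that trade-off, here is a page cache in which a single lock (the object's monitor) guards every operation. The capacity limit and least-recently-used eviction are assumptions made for the example, and the names are hypothetical. A finer-grained design might instead use ConcurrentHashMap, which avoids a global lock but makes eviction policies harder to get right.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical thread-safe LRU page cache using coarse-grained locking: one monitor guards all state. */
final class PageCache<K, V> {
    private final Map<K, V> entries;

    PageCache(int capacity) {
        if (capacity <= 0) throw new IllegalArgumentException("capacity must be positive");
        // accessOrder = true keeps entries ordered from least to most recently accessed.
        this.entries = new LinkedHashMap<K, V>(16, 0.75f, true) {
            @Override
            protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
                return size() > capacity;  // evict the least recently used page once over capacity
            }
        };
    }

    synchronized void put(K key, V value) {
        entries.put(key, value);
    }

    /** get() reorders the map in access-order mode, so it must hold the lock too. */
    synchronized V get(K key) {
        return entries.get(key);
    }

    synchronized int size() {
        return entries.size();
    }
}
```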

I then wrote a server that accepts network connections on a socket, assigns each connection its own thread, and communicates over an encrypted connection using JSON-formatted messages.
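The thread-per-connection structure can be sketched as follows. This is a simplified example, not the project's server: it uses a plain ServerSocket rather than an encrypted one, exchanges newline-delimited text instead of JSON, and the echo handler and class name are hypothetical. A cached thread pool gives each active connection its own thread while reusing idle ones.

```java
import java.io.BufferedReader;
import java.io.Closeable;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.OutputStreamWriter;
import java.io.PrintWriter;
import java.net.ServerSocket;
import java.net.Socket;
import java.nio.charset.StandardCharsets;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

/** Hypothetical thread-per-connection server: each accepted socket is handled on its own thread. */
final class ThreadPerConnectionServer implements Closeable {
    private final ServerSocket serverSocket;
    private final ExecutorService workers = Executors.newCachedThreadPool();

    ThreadPerConnectionServer(int port) throws IOException {
        serverSocket = new ServerSocket(port);  // port 0 asks the OS for any free port
    }

    int port() {
        return serverSocket.getLocalPort();
    }

    /** Runs the accept loop on a background daemon thread. */
    void start() {
        Thread acceptor = new Thread(() -> {
            while (!serverSocket.isClosed()) {
                try {
                    Socket client = serverSocket.accept();
                    workers.submit(() -> handle(client));
                } catch (IOException e) {
                    // accept() throws once the server socket is closed; the loop condition then exits.
                }
            }
        });
        acceptor.setDaemon(true);
        acceptor.start();
    }

    /** Answers each request line; a real server would parse and produce JSON here. */
    private void handle(Socket client) {
        try (client;
             BufferedReader in = new BufferedReader(new InputStreamReader(client.getInputStream(), StandardCharsets.UTF_8));
             PrintWriter out = new PrintWriter(new OutputStreamWriter(client.getOutputStream(), StandardCharsets.UTF_8), true)) {
            String line;
            while ((line = in.readLine()) != null) {
                out.println("echo: " + line);
            }
        } catch (IOException e) {
            // The client disconnected abruptly; try-with-resources has already closed everything.
        }
    }

    @Override
    public void close() throws IOException {
        serverSocket.close();
        workers.shutdownNow();
    }
}
```

Adding encryption would mainly mean creating the listening socket through javax.net.ssl.SSLServerSocketFactory instead of constructing a plain ServerSocket.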

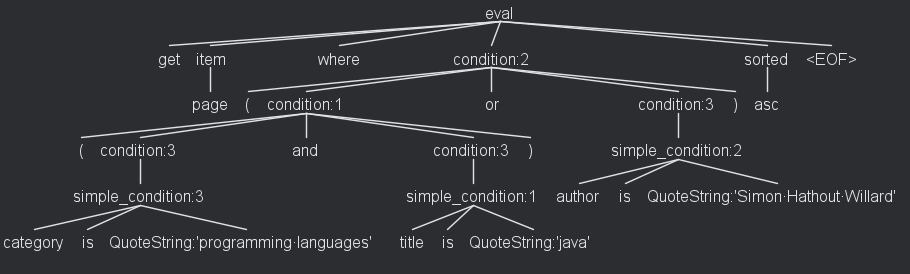

Finally, I learned how to use ANTLR to define and parse grammars. This let the server respond to structured queries and fetch the expected results from Wikipedia. In doing so, I also learned more about how build systems such as Gradle operate and how to manage dependencies on other libraries and frameworks.
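To show what a grammar for these queries involves, here is a hand-written recursive-descent parser for a simplified, hypothetical version of the query language. It does not use ANTLR, which generates its parser from a grammar file, and it does not reproduce the project's actual grammar. The rules, the precedence of "and" over "or", and the use of ASCII single quotes for strings are all assumptions. Each grammar rule becomes one method, and the output is a bracketed string form of the parse tree.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical recursive-descent parser for a simplified query language (not the ANTLR grammar). */
final class QueryParser {
    private final List<String> tokens;
    private int pos = 0;

    private QueryParser(List<String> tokens) { this.tokens = tokens; }

    /** Splits input into words, parentheses, and 'quoted strings' (quotes kept on the token). */
    static List<String> tokenize(String input) {
        List<String> out = new ArrayList<>();
        int i = 0;
        while (i < input.length()) {
            char c = input.charAt(i);
            if (Character.isWhitespace(c)) {
                i++;
            } else if (c == '(' || c == ')') {
                out.add(String.valueOf(c));
                i++;
            } else if (c == '\'') {
                int end = input.indexOf('\'', i + 1);
                if (end < 0) throw new IllegalArgumentException("unterminated string");
                out.add(input.substring(i, end + 1));
                i = end + 1;
            } else {
                int start = i;
                while (i < input.length() && !Character.isWhitespace(input.charAt(i)) && "()'".indexOf(input.charAt(i)) < 0) i++;
                out.add(input.substring(start, i));
            }
        }
        return out;
    }

    /** query := 'get' WORD 'where' orExpr ('asc' | 'desc')? */
    static String parse(String input) {
        QueryParser p = new QueryParser(tokenize(input));
        p.expect("get");
        String item = p.next();
        p.expect("where");
        String condition = p.orExpr();
        String order = "";
        if (p.peek("asc") || p.peek("desc")) order = " " + p.next().toUpperCase();
        if (p.pos != p.tokens.size()) throw new IllegalArgumentException("trailing input at token " + p.pos);
        return "GET " + item + " WHERE " + condition + order;
    }

    /** orExpr := andExpr ('or' andExpr)* */
    private String orExpr() {
        String left = andExpr();
        while (peek("or")) { next(); left = "OR(" + left + ", " + andExpr() + ")"; }
        return left;
    }

    /** andExpr := primary ('and' primary)* */
    private String andExpr() {
        String left = primary();
        while (peek("and")) { next(); left = "AND(" + left + ", " + primary() + ")"; }
        return left;
    }

    /** primary := '(' orExpr ')' | WORD 'is' STRING */
    private String primary() {
        if (peek("(")) {
            next();
            String inner = orExpr();
            expect(")");
            return inner;
        }
        String field = next();
        expect("is");
        String value = next();
        if (!value.startsWith("'")) throw new IllegalArgumentException("expected quoted string, got " + value);
        return field + "=" + value;
    }

    private boolean peek(String expected) { return pos < tokens.size() && tokens.get(pos).equals(expected); }

    private String next() {
        if (pos >= tokens.size()) throw new IllegalArgumentException("unexpected end of query");
        return tokens.get(pos++);
    }

    private void expect(String expected) {
        String actual = next();
        if (!actual.equals(expected)) throw new IllegalArgumentException("expected '" + expected + "' but got '" + actual + "'");
    }
}
```

ANTLR automates exactly this kind of code from a grammar file and also produces the parse trees used for visualizations.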

Below is a visualization of my grammar parsing the query “get page where ((category is ‘programming languages’ and title is ‘java’) or author is ‘Simon Hathout Willard’) asc”

Conclusion

Ultimately, CPEN 221 has been a fantastic opportunity to learn the fundamental coding skills needed to work as part of a team developing programs for real-world applications. By learning how to write modular, testable code, I can not only write correct code but also demonstrate its correctness, maintainability, and performance to others. This course is notoriously difficult to get a high grade in, but through immense effort and asking good questions, I am happy to say I earned a 98%.